The Free Internet Project makes the following public service announcements to provide the public with information and tips to avoid election misinformation, including fake election results and fake news of voting irregularities that may be used to delegitimize the election. We plan on sharing these public service announcements on Facebook, Instagram, Twitter, and other social media. We invite you to share them as well. You can download all the Public Service Announcements by visiting this page.

Reason for The Free Internet Project's Public Service Announcements on Election Misinformation

In anticipation of the 2020 U.S. elections, social media companies including Facebook, Instagram, Twitter, YouTube, TikTok, Pinterest, and Reddit have adopted content moderation policies that prohibit election misinformation and voter suppression on their platforms. These companies fear a repeat of the Russian interference in the 2016 U.S. election. As the bipartisan U.S. Senate Select Committee on Intelligence documented across the thousands of pages of its report on "Russian Active Measures Campaigns and Interference in the 2016 U.S. Election," the Internet Research Agency (IRA), a Russian operative group, used American-based social media platforms to interfere with the 2016 election. "Masquerading as Americans, these operatives used targeted advertisements, intentionally falsified news articles, self-generated content, and social media platform tools to interact with and attempt to deceive tens of millions of social media users in the United States. This campaign sought to polarize Americans on the basis of societal, ideological, and racial differences, provoked real world events, and was part of a foreign government's covert support of Russia's favored candidate in the U.S. presidential election." (Vol. 2, p. 3).

Facebook, Instagram, Twitter, YouTube, and other social media companies are now working hard to protect American voters from the same kind of fake news and fake content exploited by the Russian operatives in the 2016 election. But no social media platform is entirely immune from such foreign interference or attacks. On September 22, 2020, the FBI and the Cybersecurity and Infrastructure Security Agency (CISA) issued a public service announcement to warn Americans of a new kind of worry for the 2020 election: "the potential threat posed by attempts to spread disinformation regarding the results of the 2020 elections."

Our goal at The Free Internet Project is to help Americans avoid election misinformation and fake election results. We provide basic information explaining what election misinformation is, give several examples of fake ads and fake accounts used by Russian operatives in the 2016 election, and offer several tips for people to protect themselves on social media.

Explanation of The Free Internet Project's Public Service Announcements on How to Avoid Election Misinformation

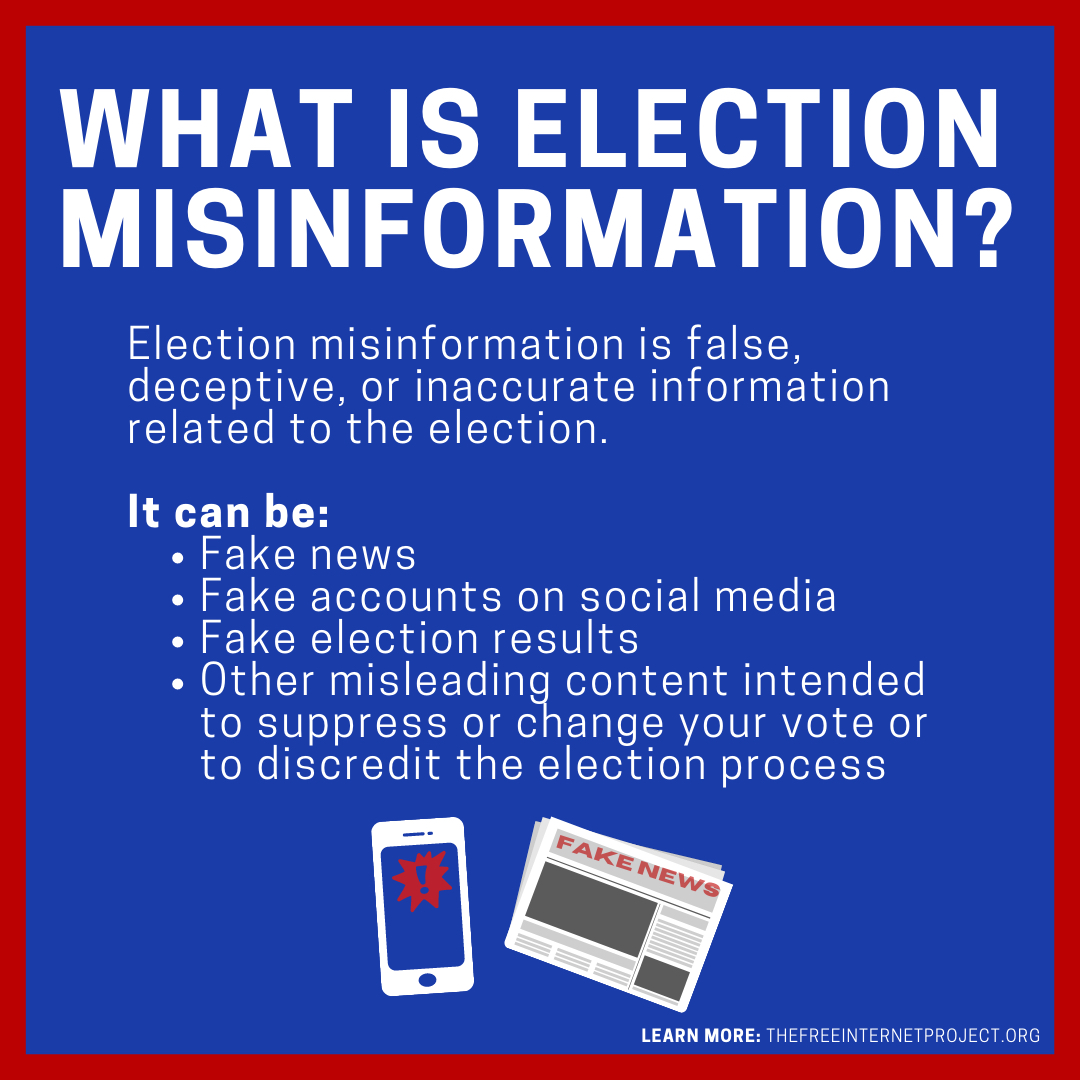

1. Definition of election misinformation

We first define "election misinformation": "Election misinformation is false, deceptive, or inaccurate information related to the election." It can come in the form of fake news, fake accounts, fake election results, or other misleading content intended to suppress or change your vote, or to discredit the election process. Some election misinformation expressly includes false or misleading claims about the candidates, the voting process, mail-in ballots, voter fraud or purported irregularities in voting, or the election results (who won). But some election misinformation doesn't mention the election at all. Instead, it comes in the form of fake content posted from fake accounts on social media, intended to make you believe they share your views. These fake accounts pose as Americans supporting particular and often popular causes, such as racial justice, Second Amendment gun rights, support for the police, and LGBTQ rights. In the 2016 U.S. election, Russian operatives used these kinds of fake American accounts to sow discord in the U.S. and polarize voters.

Volume 2 of the bipartisan Report of the Select Committee on Intelligence United States Senate on Russian Active Measures Campaigns and Interference in the 2016 U.S. Election: Russia's Use of Social Media with Additional Views, especially pp. 32-71, is the best source for examples of the Russian operatives' extensive election misinformation. This year, the FBI and CISA are especially concerned that foreign actors may spread fake election results and fake news about voter fraud or other purported irregularities in voting, in order to cast doubt on the verified election results from the states. We discuss this concern in No. 4 below. The key thing to remember is that election misinformation comes in many forms, possibly including new forms that won't be easy to recognize or detect. Beware.

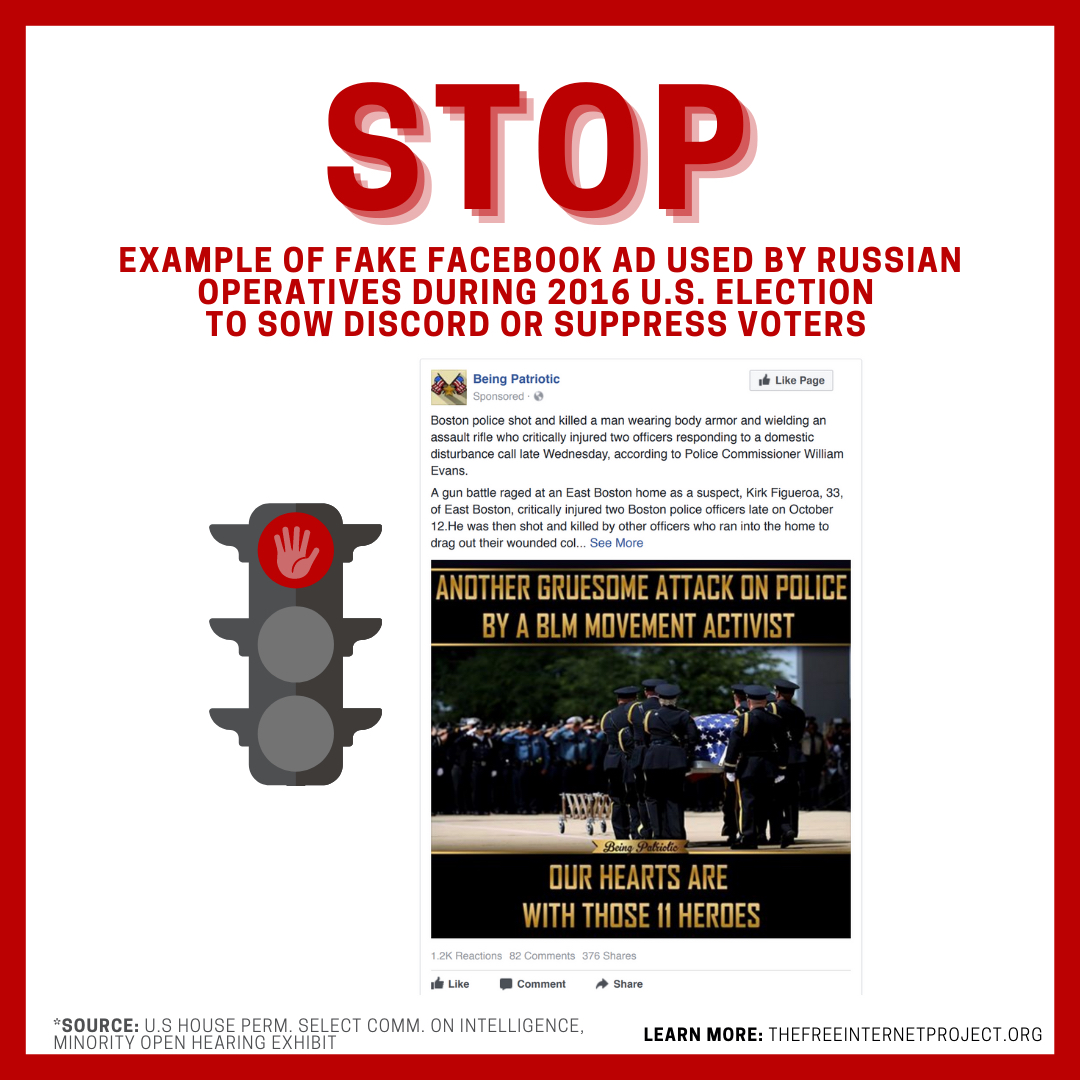

2. Stop: Don't rely on or share unverified sources on social media. They can be fake accounts, manipulated videos, and other misleading content intended to suppress or change your vote, or to delegitimize the election.

In their Sept. 22, 2020 public service announcement, "the FBI and CISA urge the American public to critically evaluate the sources of the information they consume and to seek out reliable and verified information from trusted sources, such as state and local election officials." Social media companies, including Facebook, Instagram, and Twitter, have set up voting or election information centers for their users that contain trusted sources identified by those companies. One of the safest practices on social media is to rely only on sources that you know are verified, trusted sources. Some companies verify users with a check mark inside a blue circle, but that symbol alone does not mean a user is a trusted source of news or election information. Another safe practice is to consult multiple trusted sources before you reach any conclusions about a news report.

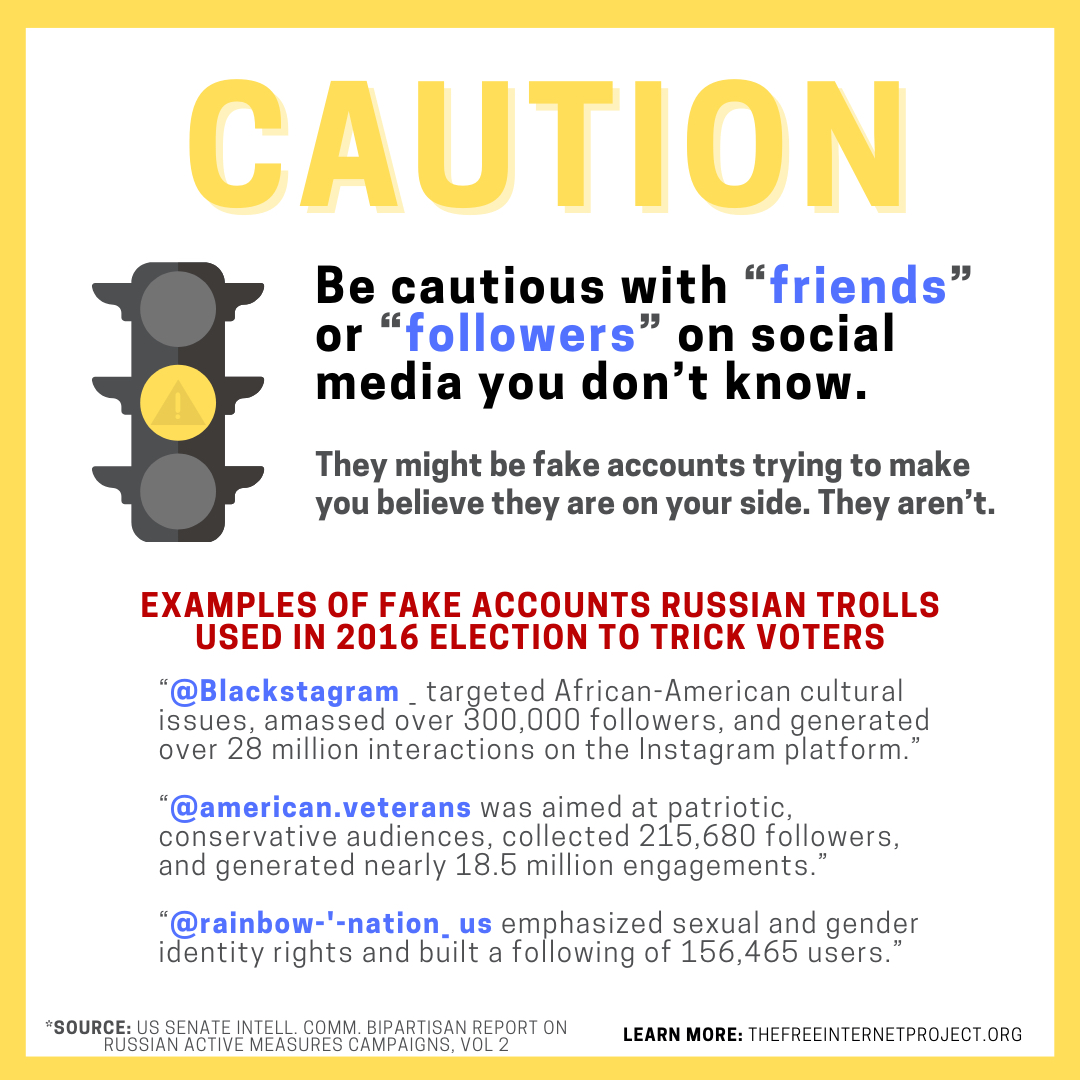

3. Caution: Be cautious with "friends" or "followers" on social media you don't know. They might be fake accounts trying to make you believe they are on your side. They aren't.

Many Americans may not realize that, in the 2016 election, Russian operatives posed as Americans on social media and pretended to share the political views of users on both sides of the political spectrum. As the bipartisan Senate Intelligence Report described in Volume 2, p. 3: "Masquerading as Americans, these operatives used targeted advertisements, intentionally falsified news articles, self-generated content, and social media platform tools to interact with and attempt to deceive tens of millions of social media users in the United States. This campaign sought to polarize Americans on the basis of societal, ideological, and racial differences, provoked real world events, and was part of a foreign government's covert support of Russia's favored candidate in the U.S. presidential election." We have used examples of posts from the fake accounts run by Russian operatives in the 2016 election, which were analyzed by the U.S. House of Representatives Permanent Select Committee on Intelligence as HPSCI Minority Open Hearing Exhibits. These examples show that the Russian operatives tried to trick Americans into believing the operatives were on their side, so they could manipulate them. As the Senate Intelligence Committee explained in Volume 2 at pp. 32-33: "In practice, the IRA's influence operatives dedicated the balance of their effort to establishing the credibility of their online personas, such as by posting innocuous content designed to appeal to like-minded users. This innocuous content allowed IRA influence operatives to build character details for their fake personas, such as a conservative Southerner or a liberal activist, until the opportune moment arrived when the account was used to deliver tailored 'payload content' designed to influence the targeted user. By this concept of operations, the volume and content of posts can obscure the actual objective behind the influence operation. 'If you're running a propaganda outfit, most of what you publish is factual so that you're taken seriously,' Graphika CEO and TAG researcher John Kelly described to the Committee, '[T]hen you can slip in the wrong thing at exactly the right time.'" In other words, your "friends" on social media may be your enemies trying to trick you.

4. Caution: The FBI warns: Don't fall for fake election results or fake news about voter fraud or irregularities in voting.

In their Sept. 22, 2020 public service announcement, the FBI and CISA warn that foreign actors and cybercriminals may attempt to spread disinformation about the 2020 election results or other false information "to discredit the electoral process and undermine confidence in U.S. democratic institutions." Because of the pandemic, this year's voting may involve increased use of mail-in ballots, which may take longer to tabulate. As the FBI and CISA explain, "Foreign actors and cybercriminals could exploit the time required to certify and announce elections’ results by disseminating disinformation that includes reports of voter suppression, cyberattacks targeting election infrastructure, voter or ballot fraud, and other problems intended to convince the public of the elections’ illegitimacy." We offer several tips drawn from the FBI and CISA public service announcement:

How to avoid fake election results or fake news of voting problems.

How to report election misinformation to social media companies or election crimes to the FBI.


"Report potential election crimes—such as disinformation about the manner, time, or place of voting—to the FBI. The FBI encourages victims to report information concerning suspicious or criminal activity to their local field office: www.fbi.gov/contact-us/field-offices."

5. Go: These safe practices protect everyone on social media.

6. Go: Vote!

Election Day is Tuesday, November 3. Many states have early voting and mail-in ballots. The U.S. Election Assistance Commission provides a collection of state voting resources at https://www.eac.gov/.

Sources for the Public Service Announcements

We have studied the available information from U.S. government sources on the Russian interference in the 2016 U.S. election and on the current threat of foreign interference in the 2020 U.S. election. We have also reviewed the community standards of the major social media companies and their announced efforts to combat election misinformation and foreign interference. Our public service announcements draw primarily on the following sources:

- FBI and CISA, Public Service Announcement, "Foreign Actors and Cybercriminals Likely to Spread Disinformation Regarding 2020 Election Results," Sept. 22, 2020.

- Report of the Select Committee on Intelligence United States Senate on Russian Active Measures Campaigns and Interference in the 2016 U.S. Election, Vol. 2: Russia's Use of Social Media with Additional Views

- U.S. House of Representatives Permanent Select Committee on Intelligence, HPSCI Minority Open Hearing Exhibits

- We have also reviewed the community standards of all the major social media companies, as well as news reports of their ongoing efforts to stop election misinformation.

- We also reviewed the Department of Homeland Security's "Homeland Threat Assessment October 2020," which warns: "Threats to our election have been another rapidly evolving issue. Nation-states like China, Russia, and Iran will try to use cyber capabilities or foreign influence to compromise or disrupt infrastructure related to the 2020 U.S. Presidential election, aggravate social and racial tensions, undermine trust in U.S. authorities, and criticize our elected officials." (p. 5)

Where you can report election crimes

Where you can report election misinformation on social media

Our Commitment to Nonpartisanship

The Free Internet Project is a Section 501(c)(3) organization. We steadfastly abide by the requirement of avoiding any political campaign on behalf of any candidate for public office. We believe that, in our democracy, every citizen's right to vote their preference should be respected. We have relied on U.S. government sources, including the current FBI and CISA, and the bipartisan Report of the U.S. Senate's Select Committee on Intelligence, to provide verified information. Our public service announcements are intended as a voter education guide, similar to the Sept. 22, 2020 public service announcement by the FBI and CISA. Nothing in our public service announcements should be interpreted as an endorsement of, or opposition to, any candidate for any public office.