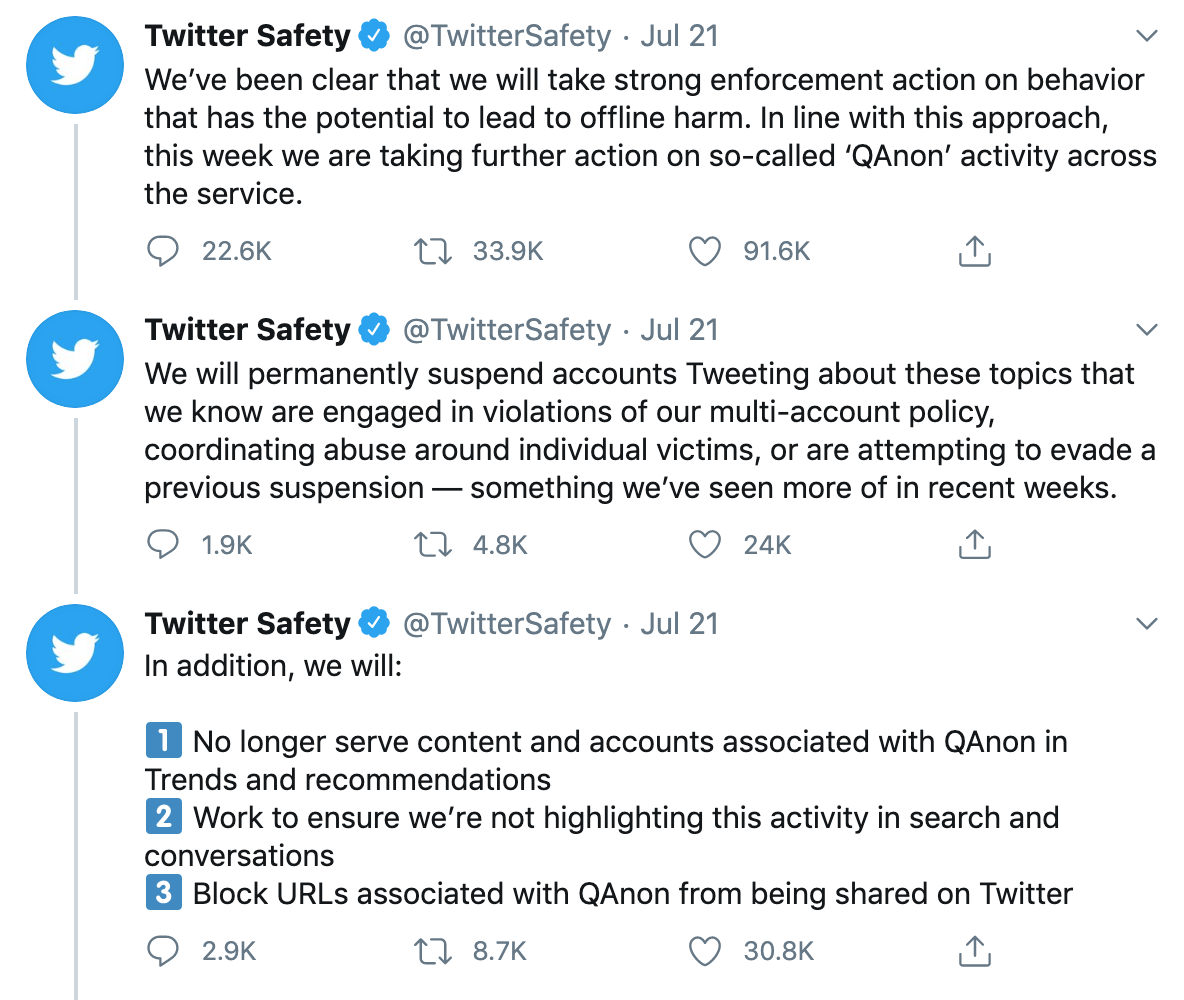

On July 21, 2020, Twitter suspended 7,000 accounts spreading QAnon conspiracy theories. In a tweet about the banning of these QAnon accounts, Twitter reiterated its commitment to taking "strong enforcement actions on behavior that has the potential to lead to offline harm." Twitter identified the QAnon accounts' violations of its community standards against "multi-account[s]," "coordinating abuse around individual victims," and "evad[ing] a previous suspension." In addition to the permanent suspensions, Twitter also decided to ban content and accounts "associated with Qanon" from Trends and recommendations on Twitter, as well as to avoid "highlighting this activity in search and conversations." Further, Twitter said it will block "URLs associated with QAnon from being shared on Twitter."

These actions by Twitter are a bold step in what has been a highly contentious area concerning the role of social media platforms in moderating hateful or harmful content. Some critics suggested that Twitter's QAnon decision lacked notice and transparency. Other critics contended that Twitter's actions were too little to stop the "omniconspiracy theory" that QAnon has become across multiple platforms.

So what exactly is QAnon? CNN describes the origins of QAnon, which began as a single conspiracy theory: its followers "claim dozens of politicians and A-list celebrities work in tandem with governments around the globe to engage in child sex abuse. Followers also believe there is a 'deep state' effort to annihilate President Donald Trump." Forbes offers a similar description: "Followers of the far-right QAnon conspiracy believe a 'deep state' of federal bureaucrats, Democratic politicians and Hollywood celebrities are plotting against President Trump and his supporters while also running an international sex-trafficking ring." In 2019, an internal FBI memo reportedly identified QAnon as a domestic terrorism threat.

Followers of QAnon are also active on Facebook, Reddit, and YouTube. The New York Times reported that Facebook was considering taking steps to limit the reach of QAnon content on its platform. Facebook is coordinating with Twitter and other platforms in considering its decision; an announcement is expected in the next month. Facebook has long been criticized for its response, or lack of response, to disinformation being spread on its platform. Facebook is now the subject of a boycott, Stop Hate for Profit, which calls on companies to stop advertising on the social media juggernaut until it takes steps to halt the spread of disinformation. Facebook continues to allow political ads using these conspiracies on its site. Forbes reports that although Facebook has seemingly tried to take steps to remove pages containing conspiracy theories, a number of pages still remain. Since 2019, Facebook has allowed 144 ads promoting QAnon on its platform, according to Media Matters. Facebook has continuously provided a platform for extremist content; it even allowed white nationalist content until officially banning it in March 2019.

Twitter's crackdown on QAnon is a step in the right direction, but it signals how little companies like Twitter and Facebook have done to stop disinformation and pernicious conspiracy theories in the past. As conspiracy theories can undermine effective public health campaigns to stop the spread of the coronavirus and foreign interference can undermine elections, social media companies appear to be playing a game of catch-up. Social media companies would be well served by devoting even greater resources to the problem, with more staff and clearer articulation of their policies and enforcement procedures. In the era of holding platforms and individuals accountable for actions that spread hate, social media companies now appear to realize that they have greater responsibilities for what happens on their platforms.

--written by Bisola Oni