Section 230 of the Communications Decency Act [text] was enacted in 1996. Many commentators have hailed Section 230 as providing the legal landscape that facilitated the explosion of expression, businesses, social media, applications, and user-generated content on the Internet. The reason is that Section 230 shielded Internet platforms from potentially business-ending liability, while facilitating the development of new applications enabling individuals to publish their own content online--instantaneously and without the need for preclearance. As Wired's Matt Reynolds puts it, "It is hard to overstate how foundational Section 230 has been for enabling all kinds of online innovations. It’s why Amazon can exist, even when third-party sellers flog Nazi memorabilia and dangerous medical misinformation. It’s why YouTube can exist, even when paedophiles flood the comment sections of videos. And it’s why Facebook can exist even when a terrorist uses the platform to stream the massacre of innocent people. It allows for the removal of all of these bad things, without forcing the platforms to be legally responsible for them."

More recently, however, Section 230 has become a lightning rod, criticized by the Trump administration and others who disagree with shielding Internet platforms from liability for the potentially unlawful or harmful content posted by their users. The Trump administration and conservative Republicans contend that Twitter and Google, for example, are engaging in biased content moderation that disfavors them and favors more liberal positions or politicians. Others criticize Section 230 as being too permissive in letting social media companies off the hook, even though so much disturbing and potentially dangerous content is shared on their platforms. This article explains Section 230 and then the recent criticisms that the Trump administration and others have raised.

A. Brief Explanation of Section 230

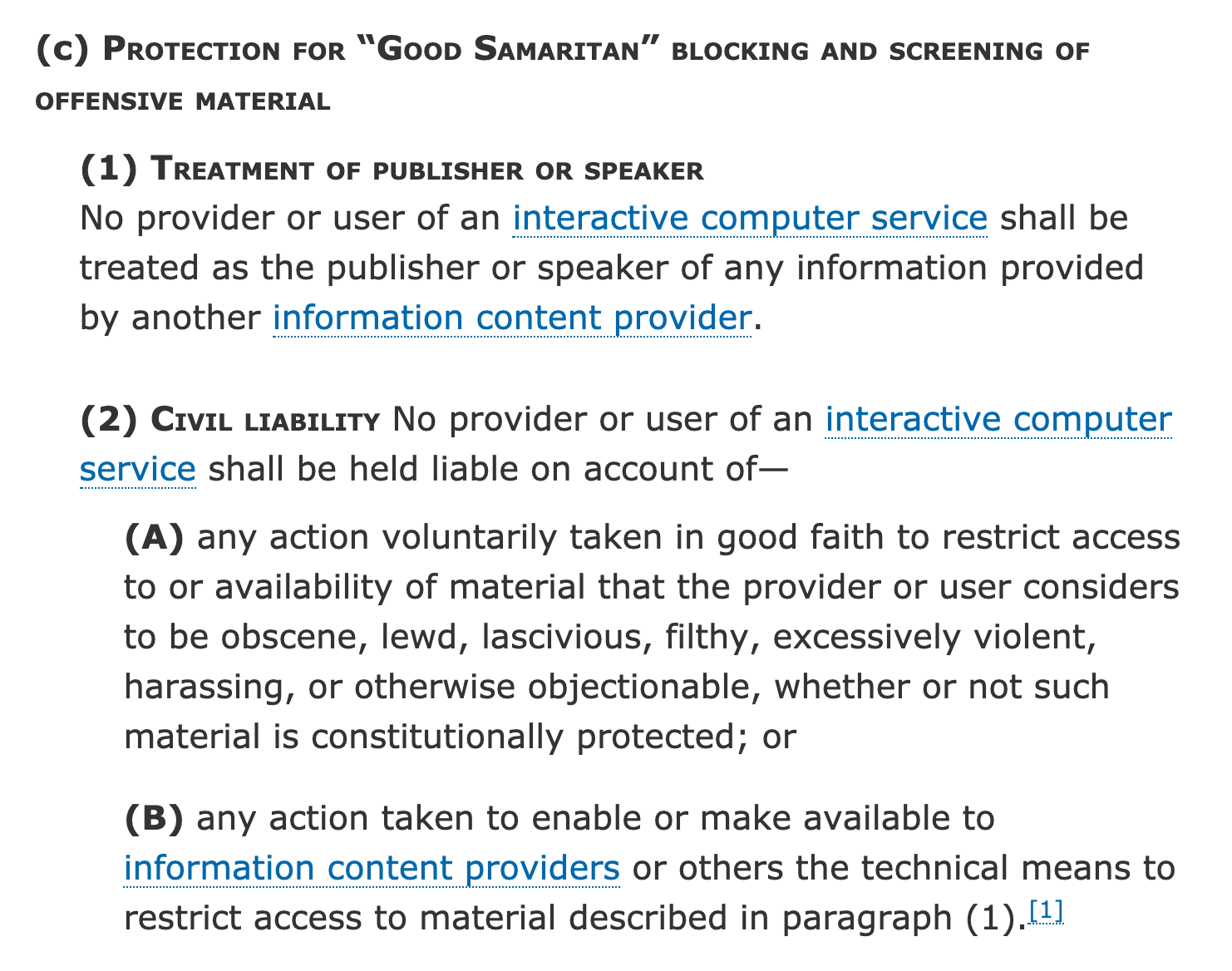

In subsection (c), Section 230 provides two different protections for Internet platforms (defined in the statute as "interactive computer services"). The provision is meant to protect from civil liability Internet companies engaging in so-called "Good Samaritan" blocking and screening of offensive material. The law was enacted in 1996 to address a perceived deficiency of defamation law: an Internet platform was exposed to defamation claims arising from content posted by its users if the platform had engaged in some review and removal of objectionable user content. By contrast, if the Internet platform did nothing, and allowed all material to remain online without any review, the Internet platform could escape defamation claims because it would not be a publisher of the content, a requirement for a defamation claim under the now notorious Prodigy decision.

First, Section 230(c)(1) states in 26 words: "Treatment of publisher or speaker. No provider or user of an interactive computer service shall be treated as the publisher or speaker of any information provided by another information content provider." Thus, for a user's post on an Internet platform, such as a blog, the person who wrote the post is its publisher or speaker. But the provider of the blog service (e.g., WordPress) is not also considered a publisher of the post. In effect, this definition of publisher or speaker of online posts creates a kind of immunity for the Internet platform from defamation claims based on the content posted by users, because to state a defamation claim, the plaintiff must show that the defendant published the defamatory content. (Copyright infringement is handled by a different safe harbor, the DMCA safe harbor, which operates under a notice-and-takedown system for ISPs.)

Second, Section 230(c)(2), the lesser known provision, states:

Civil liability. No provider or user of an interactive computer service shall be held liable on account of—

(A) any action voluntarily taken in good faith to restrict access to or availability of material that the provider or user considers to be obscene, lewd, lascivious, filthy, excessively violent, harassing, or otherwise objectionable, whether or not such material is constitutionally protected; or

(B) any action taken to enable or make available to information content providers or others the technical means to restrict access to material described in paragraph (1).

This section creates a second kind of immunity from civil liability for Internet platforms' voluntary actions taken "in good faith to restrict access to or availability of material that the provider or user considers to be obscene, lewd, lascivious, filthy, excessively violent, harassing, or otherwise objectionable, whether or not such material is constitutionally protected." This activity is now called "content moderation" by an Internet platform, and it has become a politically charged issue in the United States.

B. Trump Administration Efforts to (Re)interpret or Reform Section 230

On May 26, 2020, Twitter added a fact-checking note to one of Donald Trump's tweets asserting that "There is NO WAY (ZERO!) Mail-In Ballots will be anything less than substantially fraudulent" and claiming such fraud will occur in California. [link] Twitter's note, "Get the facts about mail-in ballots," stated: "Trump falsely claimed that mail-in ballots would lead to ‘a Rigged Election.’ However, fact-checkers say there is no evidence that mail-in ballots are linked to voter fraud."

Trump responded by issuing an Executive Order on May 28, 2020 that purports to (re)interpret Section 230 to deny immunity to Internet platforms under subsections (c)(1) and (c)(2) if the Internet platforms act to "censor content and silence viewpoints that they dislike." The Executive Order ties its interpretation to the "good faith" requirement in (c)(2) for "any action voluntarily taken in good faith to restrict access."

On May 29, 2020, Twitter flagged one of Donald Trump's tweets for violating its rules. Following protests by people in Minneapolis and other cities over the brutal death of George Floyd at the hands of police officer Derek Chauvin, Trump tweeted: "These THUGS are dishonoring the memory of George Floyd, and I won’t let that happen. Just spoke to Governor Tim Walz and told him that the Military is with him all the way. Any difficulty and we will assume control but, when the looting starts, the shooting starts. Thank you!" Above the tweet, Twitter added the following notation: "This Tweet violated the Twitter Rules about glorifying violence. However, Twitter has determined that it may be in the public’s interest for the Tweet to remain accessible." [link]

On June 17, 2020, the Department of Justice under Attorney General William Barr issued "Recommendations for Section 230 Reform." The Recommendations identify four areas for future reform of Section 230: (1) incentivizing online platforms to address illicit content, (2) clarifying federal government enforcement capabilities to address unlawful content, (3) promoting competition, and (4) promoting open discourse and greater transparency. Interestingly, the DOJ proposal stops short of adopting the same approach as Trump's Executive Order. Instead, DOJ recommends that Section 230 be amended to include a definition of good faith: "Second, the Department proposes adding a statutory definition of 'good faith,' which would limit immunity for content moderation decisions to those done in accordance with plain and particular terms of service and accompanied by a reasonable explanation, unless such notice would impede law enforcement or risk imminent harm to others. Clarifying the meaning of 'good faith' should encourage platforms to be more transparent and accountable to their users, rather than hide behind blanket Section 230 protections." By proposing a statutory definition, DOJ seems to acknowledge tacitly that, contrary to Trump's Executive Order, the meaning of "good faith" is not so clear.

C. Other Critics of Section 230

Other critics of Section 230 contend that it gives Internet platforms little or no incentive to moderate unlawful or harmful content. In contrast with Trump's criticism of political bias, this criticism is based on the view that Internet platforms should be removing more content--content that critics say is harmful or objectionable, such as revenge porn or illegal gun sales.

While it may well be time to examine whether Section 230 needs updating or refinements, the politically charged nature of today's debate creates the risk that sound policy will be lost amidst all the politics. We will preview some of the proposed bills in Congress to revise Section 230 in a subsequent article.